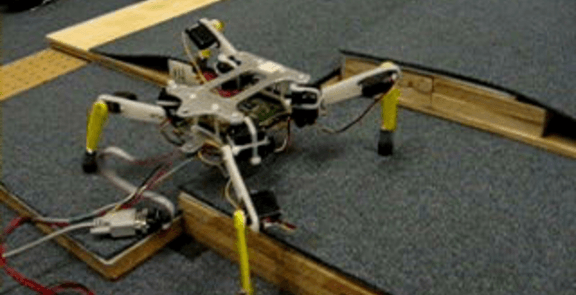

Quadruped Robot Obstacle Negotiation Via Reinforcement Learning

Legged robots can, in principle, traverse a large variety of obstacles and terrains. In this paper, we describe a successful application of reinforcement learning to the problem of negotiating obstacles with a quadruped robot. Our algorithm is based on a two-level hierarchical decomposition of the task: the high-level controller selects the sequence of foot-placement positions, and the low-level controller generates the continuous motions that move each foot to the specified positions. The high-level controller uses an estimate of the value function to guide its search; this estimate is learned partially from supervised data. The low-level controller is obtained via policy search. We demonstrate that our robot can successfully climb over a variety of obstacles that were not seen during training.
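The high-level idea (a value estimate fitted from supervised examples, then used to rank candidate footholds) can be illustrated with a deliberately simplified sketch. It assumes a 1-D terrain of candidate footholds, a linear value model V(x) = -w·(goal - x), and a greedy one-step lookahead in place of the full search. The low-level policy-search controller is reduced to a feasibility check on the height change. All names (`fit_value_weight`, `step_reward`, `plan_footsteps`) and all thresholds are hypothetical and are not the authors' implementation.

```python
from typing import List, Optional, Sequence, Tuple

# Toy 1-D terrain (hypothetical): heights[i] is the ground height, in cm,
# at candidate foothold i. The real problem plans positions for four feet.
Terrain = Sequence[float]


def fit_value_weight(samples: Sequence[Tuple[float, float]]) -> float:
    """Least-squares fit of cost_to_go ~= w * distance from supervised examples.

    Each sample is (distance_to_goal, observed_cost_to_go), e.g. taken from
    hand-labelled foot-placement sequences (assumption).
    """
    num = sum(d * c for d, c in samples)
    den = sum(d * d for d, _ in samples)
    return num / den


def step_reward(terrain: Terrain, src: int, dst: int,
                max_climb: float) -> Optional[float]:
    """Reward for moving a foot from src to dst; None if the step is infeasible."""
    rise = abs(terrain[dst] - terrain[src])
    if rise > max_climb:
        return None  # stands in for "the low-level controller cannot execute this"
    return -1.0 - 0.1 * rise  # unit cost per step plus a height-change penalty


def plan_footsteps(terrain: Terrain, start: int, goal: int, w: float,
                   max_step: int = 3,
                   max_climb: float = 10.0) -> Optional[List[int]]:
    """Greedy one-step lookahead: choose the foothold maximizing r + V(next).

    V(x) = -w * (goal - x) is the hypothetical learned value estimate.
    Returns the foothold sequence, or None if no feasible foothold exists.
    """
    path, x = [start], start
    while x < goal:
        best, best_score = None, float("-inf")
        last = min(x + max_step, len(terrain) - 1)
        for nxt in range(x + 1, last + 1):
            r = step_reward(terrain, x, nxt, max_climb)
            if r is None:
                continue  # skip footholds the low level could not reach
            score = r - w * max(goal - nxt, 0)
            if score > best_score:
                best, best_score = nxt, score
        if best is None:
            return None  # every reachable foothold is infeasible
        path.append(best)
        x = best
    return path


if __name__ == "__main__":
    w = fit_value_weight([(3, 1.0), (6, 2.0), (9, 3.0)])  # approximately 1/3
    terrain = [0, 0, 0, 25, 0, 0, 12, 12, 0, 0]  # a tall block and a ledge
    print(plan_footsteps(terrain, start=0, goal=9, w=w))  # [0, 2, 5, 8, 9]
```

The greedy lookahead is chosen only for brevity: the planner avoids the 25 cm block and the 12 cm ledge because their steps exceed `max_climb`, and the value term favors footholds closer to the goal. A deeper search would evaluate V only at the leaves of a multi-step lookahead tree.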