Learning to Grasp Novel Objects Using Vision

Abstract

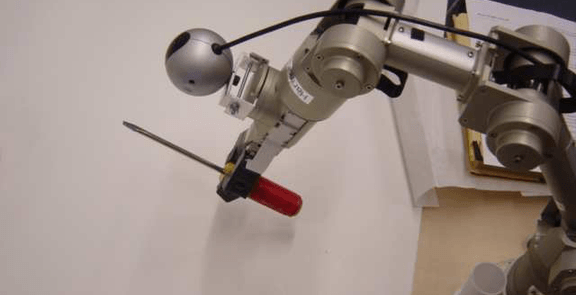

We consider the problem of grasping novel objects, specifically ones seen for the first time through vision. We present a learning algorithm that predicts, as a function of the images, the position at which to grasp the object, without building or requiring a 3-d model of the object. Our algorithm is trained via supervised learning, using synthetic images as the training set. Using our robotic arm, we demonstrate this approach by successfully grasping a variety of differently shaped objects, such as duct tape, markers, mugs, pens, wine glasses, knife-cutters, jugs, keys, toothbrushes, books, and others, including many object types not seen in the training set.
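To make the idea of predicting a grasp position directly from an image more concrete, the following is a minimal toy sketch, not the algorithm evaluated in this paper. It assumes a single hand-picked feature (mean patch brightness), a least-squares linear scorer standing in for the learning algorithm, and a trivial synthetic generator that draws a bright blob at the labeled grasp location. All names (`synth_image`, `patch_features`, `train`, `predict_grasp_patch`) and constants are hypothetical and chosen only for illustration.

```python
import numpy as np

PATCH = 4   # patch side length in pixels (illustrative assumption)
GRID = 8    # patches per image side, so toy images are 32x32


def synth_image(rng, row, col):
    """Render a toy synthetic image: a bright blob centered on patch (row, col)."""
    size = PATCH * GRID
    ys, xs = np.mgrid[0:size, 0:size]
    cy = row * PATCH + (PATCH - 1) / 2.0
    cx = col * PATCH + (PATCH - 1) / 2.0
    blob = np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2 * 1.5 ** 2))
    return blob + rng.normal(0.0, 0.01, (size, size))


def patch_features(img):
    """Return one feature row per patch: [mean brightness, bias term]."""
    means = img.reshape(GRID, PATCH, GRID, PATCH).mean(axis=(1, 3))
    return np.column_stack([means.ravel(), np.ones(GRID * GRID)])


def train(n_images=50, seed=0):
    """Fit a linear scorer on labeled synthetic images (label 1 = grasp patch)."""
    rng = np.random.default_rng(seed)
    X, y = [], []
    for _ in range(n_images):
        row, col = rng.integers(0, GRID, size=2)
        X.append(patch_features(synth_image(rng, row, col)))
        labels = np.zeros(GRID * GRID)
        labels[row * GRID + col] = 1.0
        y.append(labels)
    w, *_ = np.linalg.lstsq(np.vstack(X), np.concatenate(y), rcond=None)
    return w


def predict_grasp_patch(w, img):
    """Score every patch and return the (row, col) of the highest-scoring one."""
    scores = patch_features(img) @ w
    row, col = divmod(int(np.argmax(scores)), GRID)
    return row, col


if __name__ == "__main__":
    weights = train()
    test_img = synth_image(np.random.default_rng(1), 2, 5)
    print(predict_grasp_patch(weights, test_img))
```

The sketch works purely in image space: it scores 2-d patches and returns the best one, with no 3-d model of the object involved. A realistic system would need far richer features and training images than this toy setup.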