Learning Sound Location from a Single Microphone

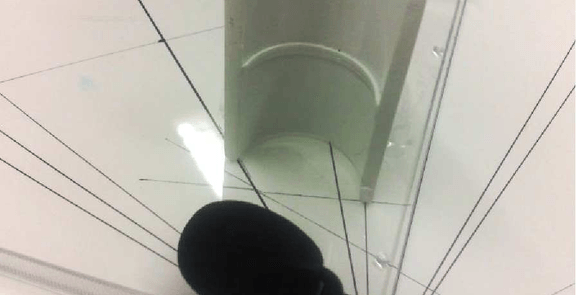

We consider the problem of estimating the incident angle of a sound using only a single microphone. The ability to perform monaural (single-ear) localization is important to many animals; indeed, monaural cues are the primary means by which humans decide whether a sound comes from the front or the back, and by which they estimate its elevation. Such monaural localization is made possible by the structure of the pinna (outer ear), which modifies sound in a way that depends on its incident angle. In this paper, we propose a machine learning approach to monaural localization that uses only a single microphone and an “artificial pinna” that distorts sound in a direction-dependent way. Our approach models the typical distribution of natural and artificial sounds, as well as the direction-dependent changes to sounds induced by the pinna. Our experimental results show that the algorithm is able to localize a wide range of sounds fairly accurately, including human speech, dog barking, waterfall, and thunder. In contrast to microphone arrays, this approach also offers the potential for significantly more compact, lower-cost, and lower-power devices for sound localization.