Learning Omnidirectional Path Following Using Dimensionality Reduction

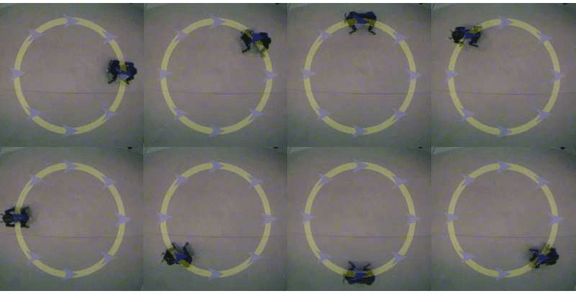

We consider the task of omnidirectional path following for a quadruped robot: moving a four-legged robot along any arbitrary path while turning in any arbitrary manner. Learning a controller capable of such motion requires learning the parameters of a very high-dimensional policy, a difficult task on a real robot. Although learning such a policy can be much easier in a model (or “simulator”) of the system, it can be extremely difficult to build a sufficiently accurate simulator. In this paper we propose a method that uses a (possibly inaccurate) simulator to identify a low-dimensional subspace of policies that spans the variations in model dynamics. This subspace will be robust to variations in the model, and can be learned on the real system using much less data than would be required to learn a policy in the original class. In our approach, we sample several models from a distribution over the kinematic and dynamics parameters of the simulator, then formulate an optimization problem that can be solved using the Reduced Rank Regression (RRR) algorithm to construct a low-dimensional class of policies that spans the major axes of variation in the space of controllers. We present a successful application of this technique to the task of omnidirectional path following, and demonstrate improvement over a number of alternative methods, including a hand-tuned controller. We present, to the best of our knowledge, the first controller capable of omnidirectional path following with parameters optimized simultaneously for all directions of motion and turning rates.
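To make the Reduced Rank Regression step concrete, the following is a minimal sketch of the classical RRR solution with identity weighting: fit ordinary least squares, then project the coefficient matrix onto the leading right singular vectors of the fitted values. It is not the paper's exact optimization problem. The function name `reduced_rank_regression`, the matrices `X` and `Y`, and the synthetic data are all assumptions made for illustration; here `X` stands in for input features and `Y` for high-dimensional target parameters.

```python
import numpy as np


def reduced_rank_regression(X, Y, rank):
    """Minimize ||Y - X B||_F subject to rank(B) <= rank.

    Returns factors (A, V) such that B = A @ V.T, where the columns of V
    form an orthonormal basis for the low-dimensional output subspace.
    """
    # Unconstrained least-squares coefficients, shape (n_features, n_params).
    B_ols, *_ = np.linalg.lstsq(X, Y, rcond=None)
    # Right singular vectors of the fitted values give the directions of
    # largest explained variation in the output space.
    _, _, Vt = np.linalg.svd(X @ B_ols, full_matrices=False)
    V = Vt[:rank].T          # (n_params, rank)
    A = B_ols @ V            # (n_features, rank)
    return A, V


# Synthetic example (hypothetical sizes): 40 target parameters that
# actually vary within a 2-dimensional subspace, plus small noise.
rng = np.random.default_rng(0)
n_samples, n_features, n_params, true_rank = 200, 6, 40, 2
X = rng.normal(size=(n_samples, n_features))
B_true = rng.normal(size=(n_features, true_rank)) @ rng.normal(
    size=(true_rank, n_params)
)
Y = X @ B_true + 0.01 * rng.normal(size=(n_samples, n_params))

A, V = reduced_rank_regression(X, Y, rank=true_rank)
B_hat = A @ V.T
rel_err = np.linalg.norm(B_hat - B_true) / np.linalg.norm(B_true)
```

In this sketch the columns of `V` play the role of a low-dimensional basis: once they are fixed, only the `rank`-dimensional coefficients need to be fit, which is the kind of saving the abstract describes when it talks about learning on the real system with much less data.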