Learning Deep Energy Models

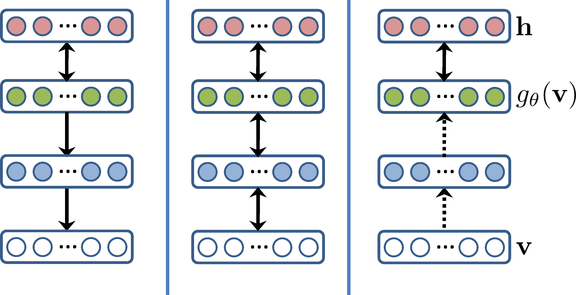

Deep generative models with multiple hidden layers have been shown to learn meaningful and compact representations of data. In this work we propose deep energy models, which use deep feedforward neural networks to model the energy landscapes that define probabilistic models. We can efficiently train all layers of our model simultaneously, which allows the lower layers to adapt to the training of the higher layers and thereby produces better generative models. We evaluate the generative performance of our models on natural images and demonstrate that this joint training of multiple layers yields qualitative and quantitative improvements over greedy layerwise training. We further generalize our models beyond the commonly used sigmoidal neural networks and show that a deep extension of the product of Student-t distributions model achieves good generative performance. Finally, we introduce a discriminative extension of our model and demonstrate that it outperforms other fully connected models on object recognition on the NORB dataset.
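To make the central idea concrete, the following is a minimal sketch of how a feedforward network can define an energy E(x) and hence an unnormalized density p(x) ∝ exp(−E(x)). It is an illustration under stated assumptions, not the parameterization used in this work: the quadratic term, the sigmoidal hidden layers, the linear readout, and the names `energy`, `layers`, and `readout` are all hypothetical choices made for the example.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def energy(x, layers, readout):
    """Scalar energy of input x under a small feedforward network.

    Assumed (illustrative) form:
        E(x) = 0.5 * ||x||^2 + readout . h_top(x)
    where h_top is the output of a stack of sigmoidal layers.
    `layers` is a list of (weights, biases) pairs; `weights` is a list
    of rows, one row per hidden unit.
    """
    h = list(x)
    for weights, biases in layers:
        h = [
            sigmoid(sum(w * v for w, v in zip(row, h)) + b)
            for row, b in zip(weights, biases)
        ]
    quadratic = 0.5 * sum(v * v for v in x)
    return quadratic + sum(u * v for u, v in zip(readout, h))


def log_unnormalized_density(x, layers, readout):
    # log p(x) = -E(x) - log Z; the partition function Z is omitted here.
    return -energy(x, layers, readout)


# Two stacked hidden layers with arbitrary illustrative weights.
example_layers = [
    ([[0.5, -0.3], [0.1, 0.8]], [0.0, 0.1]),
    ([[1.0, -1.0], [0.2, 0.4]], [0.0, -0.2]),
]
example_readout = [-1.0, 0.5]
example_energy = energy([0.3, -0.7], example_layers, example_readout)
```

Because every layer enters a single differentiable scalar function, a gradient of the energy with respect to all weights can in principle be taken at once, which is what makes joint training of the layers possible. The sigmoidal hidden units keep the network term bounded, so in this sketch the quadratic term is enough to make exp(−E(x)) integrable.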