Integrating Visual and Range Data for Robotic Object Detection

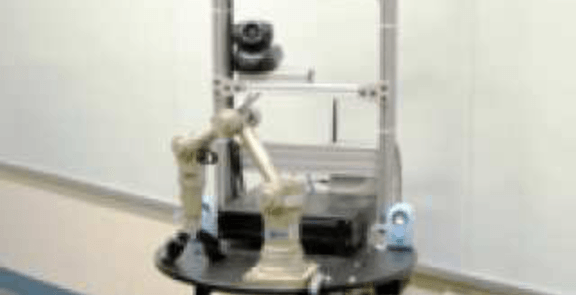

Object detection and recognition is a notoriously difficult problem, and one that has been the focus of much work in the computer vision and robotics communities. Most work has concentrated on systems that operate purely on visual inputs (i.e., images) and has largely ignored other sensor modalities. However, despite the great progress made in this direction, the goal of high-accuracy object detection for robotic platforms in cluttered real-world environments remains elusive. Instead of relying on information from the image alone, we present a method that exploits the multiple sensor modalities available on a robotic platform. In particular, our method augments a 2-d object detector with 3-d information from a depth sensor to produce a “multi-modal object detector.” We demonstrate our method on a working robotic system and evaluate its performance on a number of common household/office objects.