Autonomous Operation of Novel Elevators for Robot Navigation

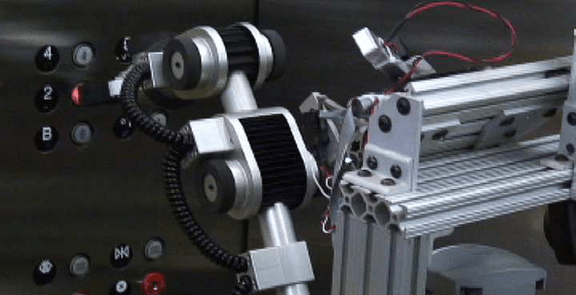

Although robot navigation in indoor environments has achieved great success, robots cannot fully navigate these spaces unless they can operate elevators, including ones they have never seen before. In this paper, we focus on the key challenge of autonomous interaction with an unknown elevator button panel. Several factors make this goal difficult: the lack of useful 3D features, the variety of elevator panel designs, variation in lighting conditions, and the small size of elevator buttons. To address the task of detecting, localizing, and labeling the buttons, we use state-of-the-art vision algorithms along with machine learning techniques to take advantage of contextual features. To verify our approach, we collected a dataset of 150 pictures of elevator panels from more than 60 distinct elevators and performed extensive offline testing. On this very diverse dataset, our algorithm correctly localized and labeled 86.2% of the buttons. Using a mobile robot platform, we then validated our algorithms in experiments in which the robot, using only its on-board sensors, autonomously interpreted the panel and pressed the appropriate button in elevators it had never seen before. In a total of 14 trials performed on 3 different elevators, our robot succeeded in localizing the requested button in all 14 trials and in pressing it correctly in 13 of the 14 trials.